generic vDPA

Introduction

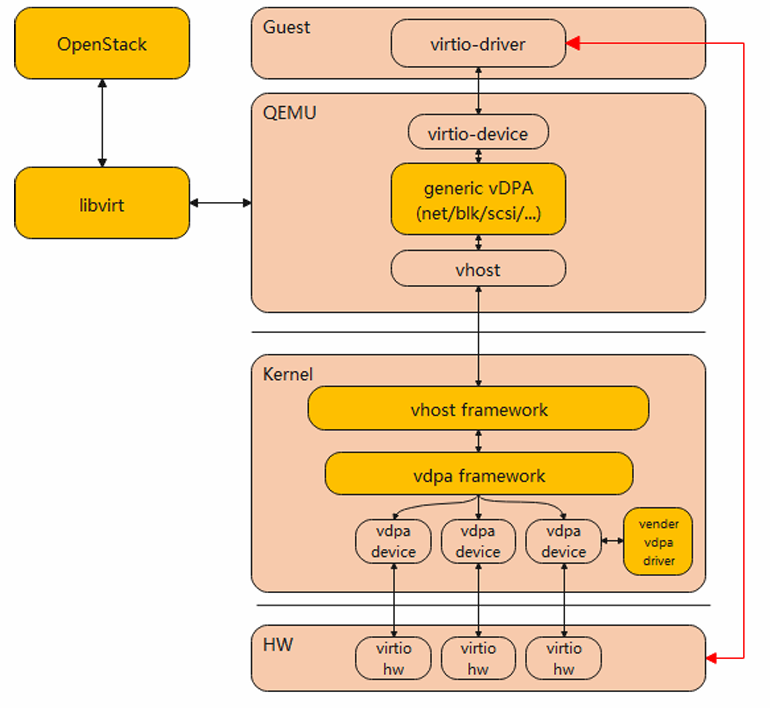

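vDPA (virtio data path acceleration) is a data-plane acceleration framework built on VirtIO paravirtualization.

VirtIO storage, network, and file-system devices exposed by a DPU card plug into the vDPA framework to deliver I/O performance on par with the hardware itself. A single upper-layer management interface lets many device types and many DPU cards be handled the same way.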

Software Architecture

Deployment Guide

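Directory layout:

- kernel: kernel patches for basic generic vDPA functionality and for live migration

- qemu: QEMU patches for basic generic vDPA device functionality and for live migration

- libvirt: libvirt patches for generic vDPA device management and lifecycle support

- doc: project documents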

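Generic vDPA is not yet fully supported by the upstream open-source projects, so the functionality is added by applying patches on top of them. Three projects are involved: the kernel, QEMU, and libvirt.

The software is built and installed from kernel 6.6, QEMU 8.2.0, and libvirt 9.10.0.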

Building and installing the kernel:

1. cd kernel

2. wget -O kernel-6.6.tar.gz https://codeload.github.com/torvalds/linux/tar.gz/refs/tags/v6.6

3. tar xvf kernel-6.6.tar.gz && cd linux-6.6

4. for p in ../*.patch; do patch -p 1 -F 0 < "$p"; done

5. Enable the VHOST_VDPA option (CONFIG_VHOST_VDPA) in the kernel configuration; it depends on CONFIG_VDPA

6. make -j64 && make modules_install -j64 && make install
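Step 5 can also be scripted. The following is a hedged sketch, not part of this project: the function names are hypothetical, and it assumes it is run from the top of the patched linux-6.6 tree after a base .config already exists. It uses the kernel's own scripts/config helper to set VDPA and VHOST_VDPA to modules, lets make olddefconfig resolve dependencies, and then checks that both options survived before the long build starts.

```shell
# Hypothetical helpers, run from the top of the patched linux-6.6 tree.

# enable_vhost_vdpa: build VDPA and VHOST_VDPA as modules (VHOST_VDPA
# depends on VDPA), then let Kconfig fill in the remaining dependencies.
enable_vhost_vdpa() {
    ./scripts/config --module VDPA --module VHOST_VDPA &&
        make olddefconfig
}

# check_vhost_vdpa_config [FILE]: succeed only if both options are
# built in (=y) or modular (=m) in FILE (default: ./.config).
check_vhost_vdpa_config() {
    local cfg="${1:-.config}"
    grep -Eq '^CONFIG_VDPA=(y|m)$' "$cfg" &&
        grep -Eq '^CONFIG_VHOST_VDPA=(y|m)$' "$cfg"
}
```

For example: enable_vhost_vdpa && check_vhost_vdpa_config && make -j64. Checking after olddefconfig matters because Kconfig silently drops an option whose dependencies are not met.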

Building and installing QEMU:

1. cd qemu

2. tar xvf qemu-8.2.0.tar.gz && cd qemu-8.2.0

3. for p in ../*.patch; do patch -p 1 -F 0 < "$p"; done

4. ./configure --target-list=aarch64-softmmu --enable-kvm --enable-vhost-vdpa

5. make -j64 && make install
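After installation it can be worth confirming that the QEMU binary was actually built with vhost-vdpa support. This is a hedged sketch with a hypothetical function name; it assumes qemu-system-aarch64 is on PATH and relies on -netdev help printing the available netdev backend names one per line.

```shell
# qemu_supports_vhost_vdpa [QEMU_BINARY]: succeed if the binary lists
# vhost-vdpa among its -netdev backends (default: qemu-system-aarch64).
# Hypothetical helper.
qemu_supports_vhost_vdpa() {
    local qemu="${1:-qemu-system-aarch64}"
    "$qemu" -netdev help 2>/dev/null |
        grep -Eq '^[[:space:]]*vhost-vdpa[[:space:]]*$'
}
```

If the check fails, the configure step most likely did not pick up --enable-vhost-vdpa.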

Building and installing libvirt:

1. cd libvirt

2. tar xvf libvirt-9.10.0.tar.gz && cd libvirt-9.10.0

3. meson setup build -Dsystem=true -Ddriver_qemu=enabled -Ddriver_lxc=disabled -Dlogin_shell=disabled

4. ninja -C build

5. ninja -C build install

Usage

Perform the following steps as the root user.

Enable SR-IOV on the NIC:

First, enable SMMU support in the host OS BIOS. The procedure differs between server vendors; refer to your server's documentation.

Then, in the host OS, configure SR-IOV on the PF that will be assigned to VMs and create VFs. Using a virtio-net device as an example:

    # lspci -s 83:00.6
    83:00.6 Ethernet controller: Virtio: Virtio network device
    # echo 16 > /sys/bus/pci/devices/0000\:83\:00.6/sriov_numvfs
    # lspci | grep Virtio
    83:00.6 Ethernet controller: Virtio: Virtio network device
    83:01.1 Ethernet controller: Virtio: Virtio network device
    83:01.2 Ethernet controller: Virtio: Virtio network device
    83:01.3 Ethernet controller: Virtio: Virtio network device
    83:01.4 Ethernet controller: Virtio: Virtio network device
    83:01.5 Ethernet controller: Virtio: Virtio network device
    83:01.6 Ethernet controller: Virtio: Virtio network device
    83:01.7 Ethernet controller: Virtio: Virtio network device
    83:02.0 Ethernet controller: Virtio: Virtio network device
    83:02.1 Ethernet controller: Virtio: Virtio network device
    83:02.2 Ethernet controller: Virtio: Virtio network device
    83:02.3 Ethernet controller: Virtio: Virtio network device
    83:02.4 Ethernet controller: Virtio: Virtio network device
    83:02.5 Ethernet controller: Virtio: Virtio network device
    83:02.6 Ethernet controller: Virtio: Virtio network device
    83:02.7 Ethernet controller: Virtio: Virtio network device
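The VF creation step can be wrapped in a function that refuses to request more VFs than the PF advertises. This is a sketch, not part of the project: create_vfs and PCI_DEVICES_DIR are hypothetical names (the directory variable exists only so the function can be exercised against a fake sysfs tree), while sriov_totalvfs and sriov_numvfs are the standard PCI sysfs attributes.

```shell
# Hypothetical helper; PCI_DEVICES_DIR can be overridden (e.g. for testing).
PCI_DEVICES_DIR="${PCI_DEVICES_DIR:-/sys/bus/pci/devices}"

# create_vfs PF_BDF NUM: create NUM VFs on the PF, within its advertised limit.
create_vfs() {
    local dev="$PCI_DEVICES_DIR/$1" num="$2" total
    total=$(cat "$dev/sriov_totalvfs") || return 1
    if [ "$num" -gt "$total" ]; then
        echo "$1 supports at most $total VFs, $num requested" >&2
        return 1
    fi
    # Nothing to do if the PF already has exactly NUM VFs.
    [ "$(cat "$dev/sriov_numvfs")" = "$num" ] && return 0
    # The kernel rejects changing one non-zero VF count to another,
    # so disable the existing VFs first, then create NUM of them.
    echo 0 > "$dev/sriov_numvfs" || return 1
    echo "$num" > "$dev/sriov_numvfs"
}
```

For the device above this would be create_vfs 0000:83:00.6 16. Note that resetting to 0 removes any VFs already handed to running VMs, so only change the count while the VFs are unused.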

Unbind the VFs from their current driver and bind them to the hardware vendor's vDPA driver, where ${vendor_vdpa} is the name of that driver:

    echo 0000:83:01.1 > /sys/bus/pci/devices/0000\:83\:01.1/driver/unbind
    echo 0000:83:01.2 > /sys/bus/pci/devices/0000\:83\:01.2/driver/unbind
    echo 0000:83:01.3 > /sys/bus/pci/devices/0000\:83\:01.3/driver/unbind
    echo 0000:83:01.4 > /sys/bus/pci/devices/0000\:83\:01.4/driver/unbind
    echo 0000:83:01.5 > /sys/bus/pci/devices/0000\:83\:01.5/driver/unbind
    echo -n "1af4 1000" > /sys/bus/pci/drivers/${vendor_vdpa}/new_id
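The unbind commands all follow one pattern, so a loop avoids copy-paste slips in the device addresses. This is a hedged sketch with hypothetical names (bind_vfs_to_vdpa, PCI_BUS_DIR); the driver name still has to come from the hardware vendor, exactly as ${vendor_vdpa} does in the commands above, and the vendor/device ID "1af4 1000" is taken from those commands.

```shell
# Hypothetical helper; PCI_BUS_DIR can be overridden (e.g. for testing).
PCI_BUS_DIR="${PCI_BUS_DIR:-/sys/bus/pci}"

# bind_vfs_to_vdpa DRIVER BDF...: unbind each VF from its current driver,
# then register the virtio-net vendor/device ID with DRIVER.
bind_vfs_to_vdpa() {
    local drv="$1" bdf
    shift
    for bdf in "$@"; do
        # Skip VFs that are not bound to any driver.
        if [ -e "$PCI_BUS_DIR/devices/$bdf/driver" ]; then
            echo "$bdf" > "$PCI_BUS_DIR/devices/$bdf/driver/unbind" || return 1
        fi
    done
    # Adding the ID via new_id makes DRIVER probe unbound devices that match it.
    echo -n "1af4 1000" > "$PCI_BUS_DIR/drivers/$drv/new_id"
}
```

With bash brace expansion, the five VFs above become: bind_vfs_to_vdpa "$vendor_vdpa" 0000:83:01.{1..5}. Writing an ID that the driver already has fails, so run the new_id step only once per boot.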

Once the VFs are bound to the vDPA driver, the vdpa command lists the vdpa management devices:

    # vdpa mgmtdev show
    pci/0000:83:01.1: supported_classes net
    pci/0000:83:01.2: supported_classes net
    pci/0000:83:01.3: supported_classes net
    pci/0000:83:01.4: supported_classes net
    pci/0000:83:01.5: supported_classes net

Next, create a vhost-vDPA device on each management device:

    vdpa dev add name vdpa0 mgmtdev pci/0000:83:01.1
    vdpa dev add name vdpa1 mgmtdev pci/0000:83:01.2
    vdpa dev add name vdpa2 mgmtdev pci/0000:83:01.3
    vdpa dev add name vdpa3 mgmtdev pci/0000:83:01.4
    vdpa dev add name vdpa4 mgmtdev pci/0000:83:01.5
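The vdpa dev add commands can likewise be generated from a list of management devices, numbering the devices in order. A hedged sketch: add_vdpa_devices and VDPA_BIN are hypothetical, and setting VDPA_BIN=echo turns the function into a dry run that only prints the commands it would run.

```shell
# Hypothetical helper; set VDPA_BIN=echo for a dry run.
VDPA_BIN="${VDPA_BIN:-vdpa}"

# add_vdpa_devices MGMTDEV...: create vdpa0, vdpa1, ... in argument order.
add_vdpa_devices() {
    local i=0 mgmt
    for mgmt in "$@"; do
        "$VDPA_BIN" dev add name "vdpa$i" mgmtdev "$mgmt" || return 1
        i=$((i + 1))
    done
}
```

For the five management devices above: add_vdpa_devices pci/0000:83:01.{1..5} (bash brace expansion). The function stops at the first failure, so later names are not shifted onto the wrong device.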

After the vhost-vDPA devices are created, list them with the vdpa command, or query the host's vhost-vDPA devices through libvirt:

    # vdpa dev show
    vdpa0: type network mgmtdev pci/0000:83:01.1 vendor_id 6900 max_vqs 3 max_vq_size 256
    vdpa1: type network mgmtdev pci/0000:83:01.2 vendor_id 6900 max_vqs 3 max_vq_size 256
    vdpa2: type network mgmtdev pci/0000:83:01.3 vendor_id 6900 max_vqs 3 max_vq_size 256
    vdpa3: type network mgmtdev pci/0000:83:01.4 vendor_id 6900 max_vqs 3 max_vq_size 256
    vdpa4: type network mgmtdev pci/0000:83:01.5 vendor_id 6900 max_vqs 3 max_vq_size 256
    # virsh nodedev-list vdpa
    vdpa_vdpa0
    vdpa_vdpa1
    vdpa_vdpa2
    vdpa_vdpa3
    vdpa_vdpa4
    # virsh nodedev-dumpxml vdpa_vdpa0
    <device>
      <name>vdpa_vdpa0</name>
      <path>/sys/devices/pci0000:00/0000:00:0c.0/0000:83:01.1/vdpa0</path>
      <parent>pci_0000_83_01_1</parent>
      <driver>
        <name>vhost_vdpa</name>
      </driver>
      <capability type='vdpa'>
        <chardev>/dev/vhost-vdpa-0</chardev>
      </capability>
    </device>
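virsh nodedev-dumpxml reports which character device belongs to a vDPA device. Without libvirt, the same mapping can be read from sysfs. This sketch assumes the vhost_vdpa driver registers a vhost-vdpa-N child directory under /sys/bus/vdpa/devices/NAME/; verify that layout on your kernel before relying on it. The function name and the VDPA_SYSFS_DIR override are hypothetical.

```shell
# Hypothetical helper; VDPA_SYSFS_DIR can be overridden (e.g. for testing).
VDPA_SYSFS_DIR="${VDPA_SYSFS_DIR:-/sys/bus/vdpa/devices}"

# vhost_vdpa_chardev NAME: print /dev/vhost-vdpa-N for vDPA device NAME.
# Assumes a vhost-vdpa-N child directory under the device's sysfs entry.
vhost_vdpa_chardev() {
    local entry
    for entry in "$VDPA_SYSFS_DIR/$1"/vhost-vdpa-*; do
        # An unmatched glob stays literal, so check that the entry exists.
        [ -e "$entry" ] || continue
        echo "/dev/${entry##*/}"
        return 0
    done
    echo "no vhost-vdpa device found for $1" >&2
    return 1
}
```

On the host above, vhost_vdpa_chardev vdpa0 should print the same path as the chardev element in the libvirt output.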

When creating the VM, add a vDPA passthrough device to the VM's configuration file; source dev is the path of the vhost-vdpa character device created on the host:

    <devices>
      <hostdev mode='subsystem' type='vdpa'>
        <source dev='/dev/vhost-vdpa-0'/>
      </hostdev>
    </devices>
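When several VMs each get a vhost-vDPA device, generating the hostdev element avoids editing XML by hand. A hedged sketch: vdpa_hostdev_xml is a hypothetical helper that only reproduces the element shown above and rejects paths that do not look like /dev/vhost-vdpa-N; its output goes inside the domain's devices element.

```shell
# vdpa_hostdev_xml CHARDEV: print a <hostdev> element for a vhost-vdpa
# character device (hypothetical helper).
vdpa_hostdev_xml() {
    case "$1" in
        /dev/vhost-vdpa-[0-9]*) ;;
        *) echo "not a vhost-vdpa character device: $1" >&2; return 1 ;;
    esac
    printf "<hostdev mode='subsystem' type='vdpa'>\n"
    printf "  <source dev='%s'/>\n" "$1"
    printf "</hostdev>\n"
}
```

For example: vdpa_hostdev_xml /dev/vhost-vdpa-0 > hostdev-vdpa0.xml, then copy the element into the VM's configuration.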

Once the VM is running, the corresponding virtio-net device is visible inside it, and after an IP address is configured the NIC works normally:

    # lspci | grep -i eth
    05:00.0 Ethernet controller: Virtio: Virtio network device (rev 01)
    # ifconfig eth0 192.168.1.100
    # ping 192.168.1.200
    PING 192.168.1.200 (192.168.1.200) 56(84) bytes of data.
    64 bytes from 192.168.1.200: icmp_seq=1 ttl=64 time=0.324 ms
    64 bytes from 192.168.1.200: icmp_seq=2 ttl=64 time=0.141 ms

    --- 192.168.1.200 ping statistics ---
    2 packets transmitted, 2 received, 0% packet loss, time 1001ms
    rtt min/avg/max/mdev = 0.141/0.232/0.324/0.091 ms
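To check guest connectivity from a script rather than by eye, the packet-loss figure can be extracted from ping's summary line. A hedged sketch for use inside the guest, with hypothetical function names; it assumes the iputils summary format shown above ("N packets transmitted, N received, X% packet loss, ...").

```shell
# ping_loss_pct: read ping output on stdin, print the packet-loss percentage.
ping_loss_pct() {
    sed -n 's/.* \([0-9.]*\)% packet loss.*/\1/p'
}

# link_ok TARGET [COUNT]: succeed only if every ping reply came back.
link_ok() {
    local loss
    loss=$(ping -c "${2:-2}" "$1" | ping_loss_pct)
    [ "$loss" = "0" ]
}
```

For the guest above: link_ok 192.168.1.200 && echo "vDPA NIC OK".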

Release Plan

- Basic generic vDPA functionality - 2024.08.30

- generic vDPA live migration - 2024.10.30

- generic vDPA documentation - 2024.11.30